The importance of data cannot be overemphasized in today's world. Effective data storage and processing is crucial to every organization across different industries. Without the right tools, organizations can encounter challenges that will hinder their success and ability to operate efficiently.

Choosing the right platform for data storage and processing has a lot of effects on the overall performance of an organization's data operations, which is why we will be comparing two leading platforms: Hadoop vs Snowflake. We'll review each platform's key features and discuss which aligns with your specific data needs and development goals.

What Is Hadoop?

Hadoop, also known as Apache Hadoop, is an open-source framework that allows for the distributed processing of large datasets across clusters of computers. Yahoo developed it in the early 2000s to manage large volumes of web data. It's designed to handle massive datasets that traditional database systems struggle with, and Google's distributed computing research inspired this.

Instead of using one large computer to store and process the data, Hadoop divides data into smaller chunks and distributes them across multiple machines for parallel processing. This distributed approach allows for high scalability and fault tolerance.

Hadoop was initially named after co-founder Doug Cutting's son's toy elephant, but it's sometimes called an acronym for High Availability Distributed Object Oriented Platform.

Hadoop uses its main components to store, process, and manage large datasets in a distributed environment. The core components that make up the Hadoop framework include the following:

Hadoop Distributed File System (HDFS)

This distributed file system stores large datasets across the cluster, providing scalability and fault tolerance by replicating blocks across nodes. This redundancy ensures that data remains accessible from other replicas even if a node storing a specific data block fails.

Yet Another Resource Negotiator (YARN)

Yarn is Hadoop's resource management layer. It's responsible for managing cluster resources and job scheduling. It allocates memory, CPU, and disk space resources to run applications efficiently.

MapReduce

MapReduce is a programming model and processing engine for large-scale data in Hadoop that splits the data into smaller chunks and handles them in parallel. The first phase, the map phase, involves breaking down data processing tasks into smaller subtasks and distributing them across nodes in a cluster. Each worker node then processes a subset of the data concurrently and generates intermediate key-value pairs. This allows for the handling of large data in a quicker and more efficient way.

Hadoop Common

This component provides a collection of utilities and libraries used by other Hadoop components.

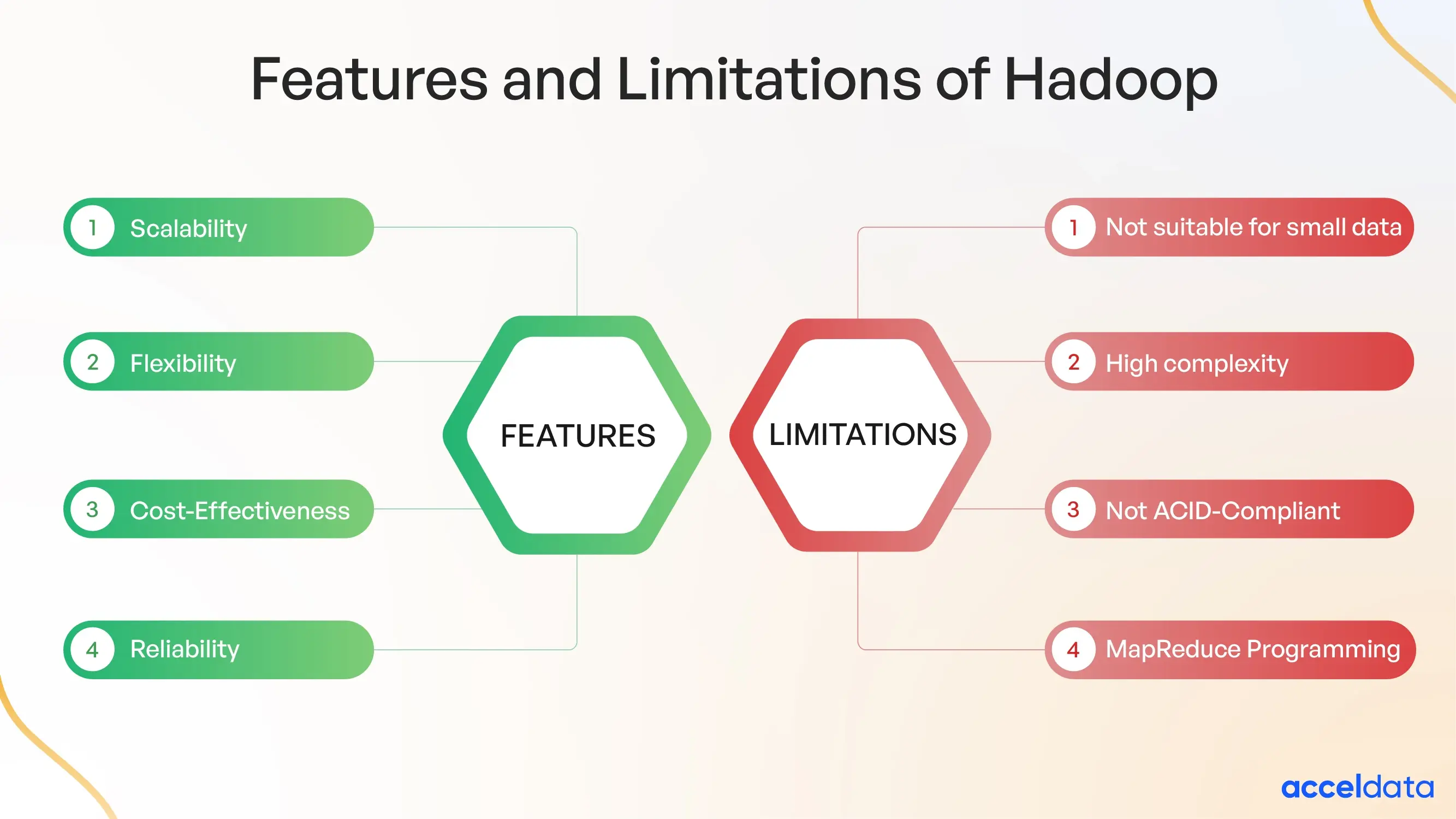

Key Features of Hadoop

- Scalability: Since Hadoop uses a distributed file system to store data, as your data volume grows, you can easily add more storage capacity by incorporating additional servers into the cluster.

- Flexibility: Unlike traditional relational databases, Hadoop allows the storage of various data formats, making it suitable for a broad range of use cases.

- Cost-Effectiveness: Hadoop runs on commodity hardware, which is less expensive than the specialized hardware required for some big data platforms. It's also an open-source project, meaning it's free to use and cost-effective.

Limitations of Hadoop

While Hadoop is a powerful and widely used framework for big data processing, it also has some limitations.

- Not Suitable for Small Data: Hadoop is designed to process large data volumes. The overhead of setting up and managing a Hadoop cluster may outweigh the benefits for small or medium-sized datasets.

- High Complexity: Setting up a Hadoop cluster requires technical expertise in its underlying architecture, which can be a barrier for organizations lacking in-house skills.

- MapReduce Programming: Writing efficient MapReduce jobs can be complex, especially for beginners. Debugging issues in parallel processing workflows can be challenging.

- Not ACID-Compliant: ACID (Atomicity, Consistency, Isolation, Durability) compliance refers to a set of properties that ensures the reliability of database transactions. Hadoop is not ACID-compliant as it requires third-party tools.

What Are the Use Cases of Hadoop?

Here are some of the use cases of Hadoop:

- Risk Management: Financial institutions and insurance companies use Hadoop to analyze large datasets for risk assessment, including analyzing financial transactions to detect fraud and developing customized risk management strategies.

- Logs Analytics: Organizations use Hadoop to process and analyze log data from web servers, applications, and network devices. This facilitates real-time monitoring of system performance and detecting and troubleshooting issues.

- Data Storage and Archiving: Hadoop is a cost-effective solution for storing different kinds of data while ensuring that the data can be used for future analysis.

What Is Snowflake?

Snowflake is a cloud-based data warehousing platform for storing, processing, and analyzing large volumes of data. It's designed to handle the demands of modern data analytics and business intelligence workloads, offering scalability, performance, and flexibility.

Snowflake separates compute and storage resources, allowing users to scale each independently. This separation also means that users only pay for their resources, which is more cost-effective than traditional data warehousing solutions.

Unlike traditional data warehouses that run on-premises, Snowflake is a software-as-a-service (SaaS) offering, meaning you access it through the internet without managing any hardware or software yourself.

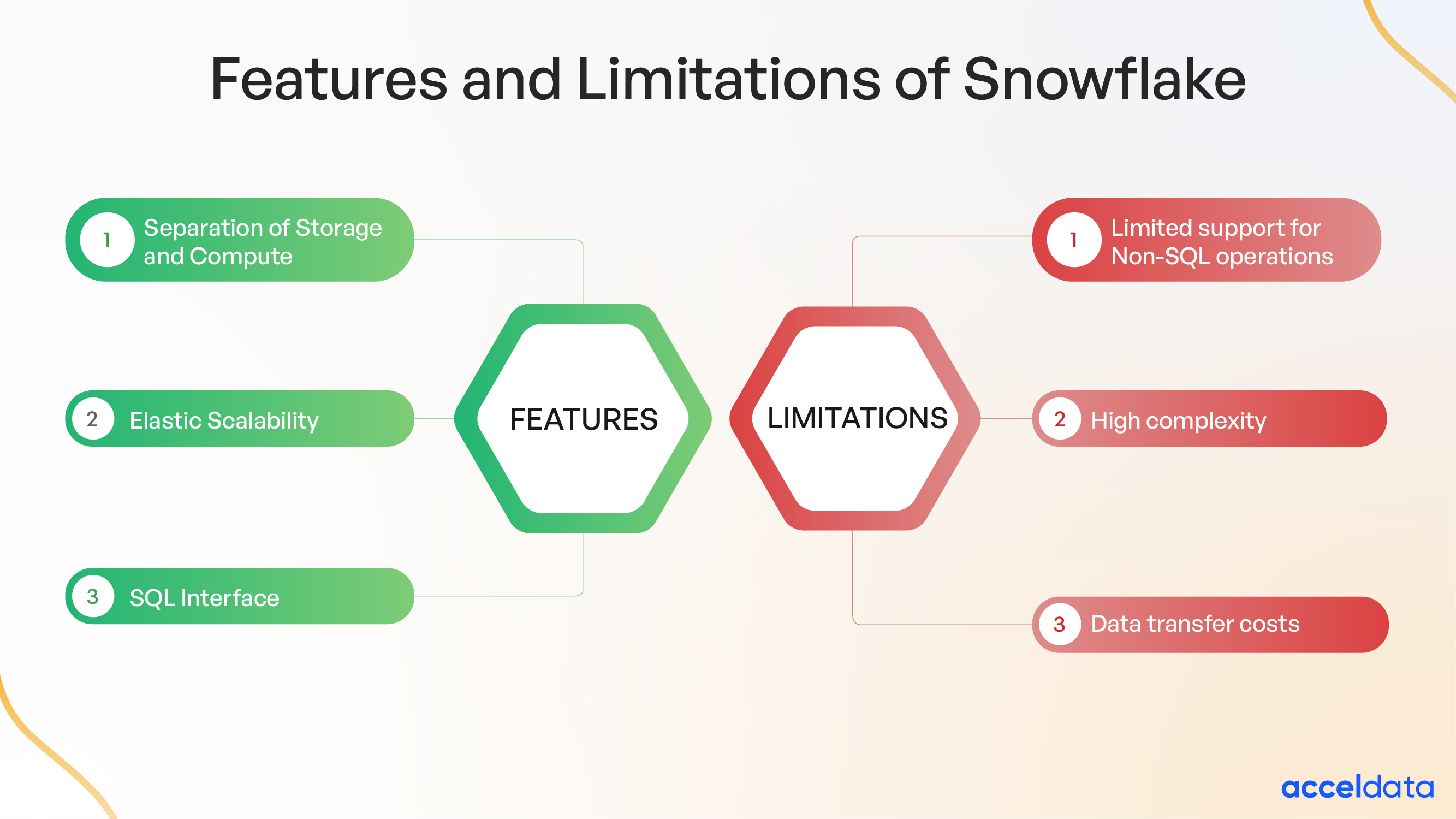

Key Features of Snowflake

- Separation of Storage and Compute: Snowflake separates data storage and compute resources. You can independently scale storage capacity for your data and processing power for your queries, optimizing resource utilization and cost efficiency.

- Elastic Scalability: Snowflake allows you to scale storage and compute resources on demand quickly. This ensures you have the processing power to handle large workloads without worrying about capacity limitations.

- SQL Interface: Snowflake uses a familiar SQL interface, making it easy for data analysts and data scientists to query data using skills they already possess.

Limitations of Snowflake

Like any technology, Snowflake has limitations. Understanding these limitations can help users make the right choices about when and how to use Snowflake.

- Pricing Model: While Snowflake offers a pay-as-you-go pricing model, costs can accumulate quickly for intensive workloads or storing massive datasets. Snowflake breaks down its costs into storage and computing charges.

- Limited Support for Non-SQL Operations: Snowflake is primarily designed for SQL-based operations. Users who require support for non-SQL operations or prefer other query languages may find Snowflake less suitable.

- Data Transfer Costs: Snowflake charges for data egress (data transfer out of Snowflake) and data ingress (data transfer into Snowflake) between regions or cloud providers. Organizations with geographically distributed data or multi-cloud deployments may incur additional data transfer costs.

What Are the Use Cases of Snowflake?

- Data Warehousing: Snowflake provides a scalable and cost-effective platform for storing and analyzing large datasets, eliminating the need to manage on-premises infrastructure.

- Data Sharing and Collaboration: Snowflake allows organizations to securely share data with partners, customers, or other stakeholders via the Snowflake marketplace. This is useful for collaborative projects, where different organizations or departments share data.

- Machine Learning and Artificial Intelligence: Snowflake can be a data lake for storing and managing the vast data required for machine learning and AI projects.

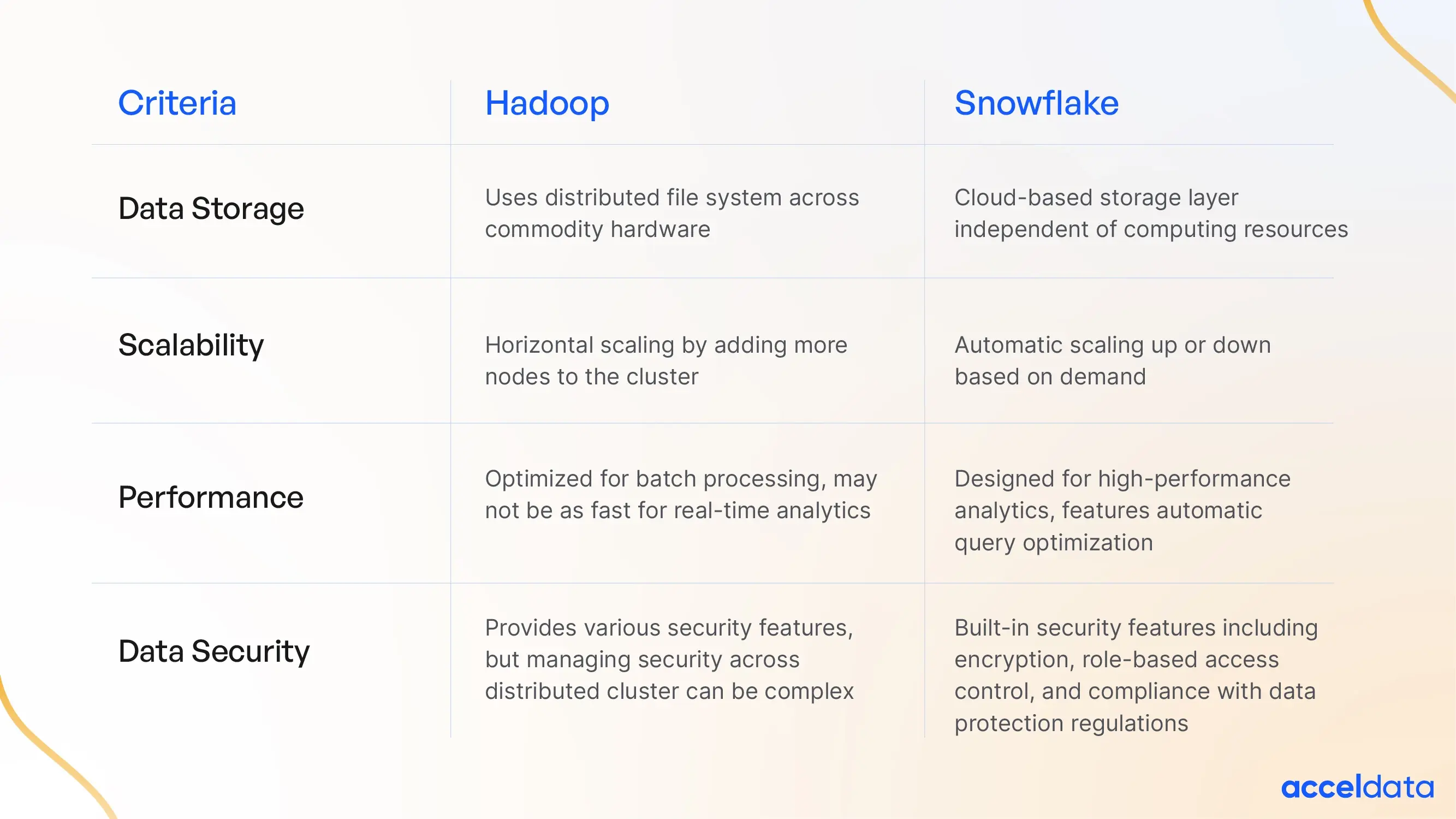

Hadoop vs. Snowflake

Now that you have seen these two data platforms' capabilities, it's essential to understand which one best suits your specific data needs. Let's compare both platforms based on the following criteria:

Data Storage

- Hadoop uses a distributed file system to store large datasets across a cluster of commodity hardware. It breaks down files into smaller blocks and distributes them across multiple nodes for parallel processing. It's designed to handle different data formats, making it versatile for various applications.

- Snowflake uses a cloud-based storage layer independent of computing resources. This highly scalable storage supports various data formats, including structured, semi-structured, and unstructured data.

Scalability

- Hadoop offers horizontal scaling by adding more nodes to the cluster, which increases storage capacity and processing power. However, managing a large cluster can be complex and requires technical expertise.

- Snowflake automatically scales up or down based on demand, ensuring that users only pay for the resources they use. This elastic scaling makes it cost-effective for varying workloads. Its architecture allows for performance optimization through features like multi-cluster warehouses, which can significantly enhance query performance.

Performance

- Hadoop is optimized for batch processing of large datasets, making it suitable for big data analytics. It uses the MapReduce programming model, which can be efficient for certain types of data processing tasks but may not be as fast for real-time analytics.

- Snowflake is designed for high-performance analytics. It features automatic query optimization, parallel processing, and caching to distribute queries across multiple compute nodes for fast query response times and minimal latency, which is particularly beneficial for complex analytical workloads.

Data Security

- Security is not Hadoop's core strength. While it provides various security features like user authentication and authorization, additional security measures might be needed depending on the sensitivity of your data. Managing security across a distributed cluster can be complex and requires significant expertise.

- Snowflake offers built-in security features, including encryption for data at rest and in transit, role-based access control, and audit logs. These features are designed to be easy to implement and manage. Snowflake also complies with various data protection regulations, making it suitable for organizations with strict compliance requirements.

Conclusion

The data platform you choose to use will always depend on your specific needs. Hadoop's distributed file system and horizontal scalability suit organizations with extensive on-premises data infrastructure and expertise in managing Hadoop clusters. On the other hand, Snowflake's elastic scalability, high-performance analytics, and powerful security features make it a suitable choice for organizations looking for a cloud-based data warehousing solution with enhanced security capabilities.

The selection between Hadoop vs Snowflake depends on existing infrastructure, workload requirements, scalability needs, and security considerations.

Maximizing Efficiency: The Role of Data Observability in Hadoop and Snowflake

In conclusion, while both Hadoop and Snowflake offer distinct advantages in data storage, scalability, performance, and security, the importance of data observability cannot be overstated for maximizing their potential. Acceldata Data Observability facilitates comprehensive monitoring, troubleshooting, and optimization of data processes on both platforms.

In Hadoop, data observability allows administrators to monitor cluster health, track job execution, and identify performance bottlenecks. With insights gained from observability tools, organizations can fine-tune configurations, optimize resource utilization, and ensure efficient data processing.

Similarly, in Snowflake, data observability plays a crucial role in monitoring query performance, resource usage, and data access patterns. By leveraging features like Snowflake's query history and resource monitors, users can identify inefficient queries, optimize warehouse configurations, and enhance overall system performance.

Overall, Acceldata Data Observability empowers organizations to proactively manage and optimize their data infrastructure, ensuring that both Hadoop and Snowflake operate at peak efficiency, deliver optimal performance, and meet the evolving needs of modern data-driven enterprises.

.webp)

.webp)

.webp)

.webp)